Continued AI breakthroughs, particularly in generative AI, have resulted in heightened attention on the AI industry in recent years and present an opportunity for crypto projects that sit at the intersection between the two. We previously covered some possibilities for the industry in an earlier report in June 2023, noting that the overall capital allocation in crypto appeared under-indexed on AI. The crypto-AI space has since grown tremendously, and we think it’s important to highlight certain practical challenges that could stand in its path to widespread adoption.

Rapid changes in AI make us cautious of bold claims that crypto-focused platforms are uniquely positioned to disrupt the industry, making the path towards long-term and sustainable value accrual to most AI tokens uncertain in our view, especially for those on a fixed tokenomic model. On the contrary, we believe some of the emerging trends in the AI sector could actually make it more difficult for crypto-based innovations to gain adoption in light of broader market competition and regulations.

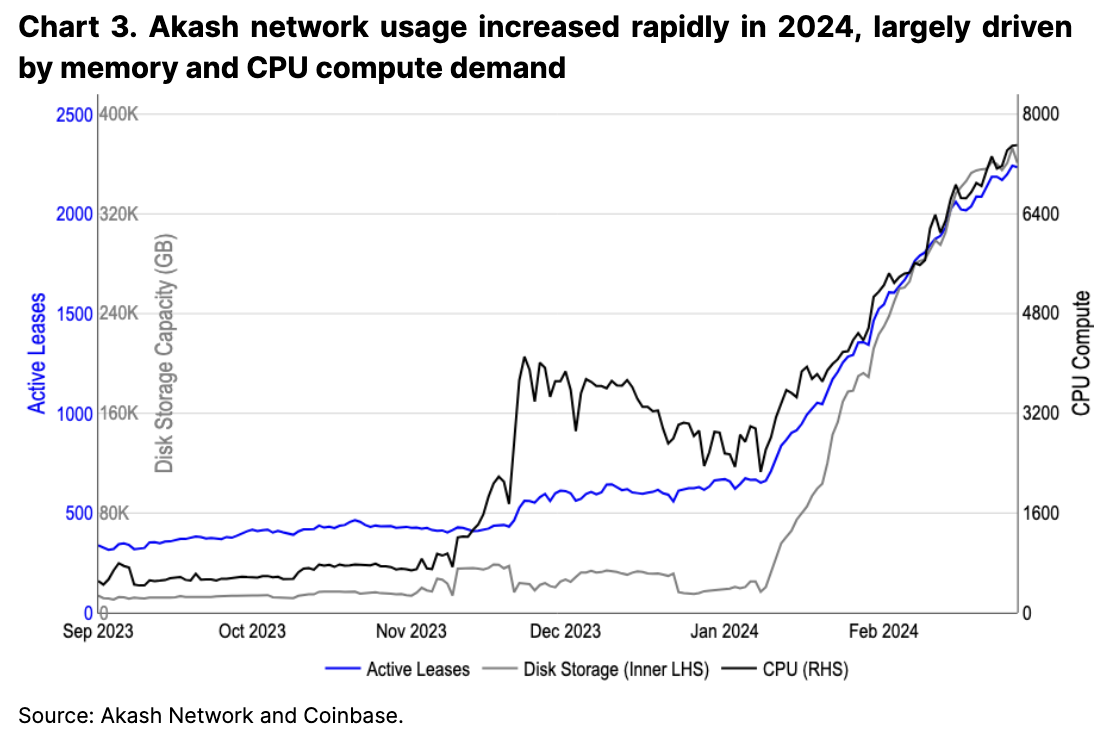

That said, we believe the intersection between AI and crypto is wide-ranging and has varying opportunities. Certain sub-sectors may see a more rapid pace of adoption, though many such areas lack tradeable tokens. Still, that does not appear to have hampered investor appetite. We find that the performance of AI-related crypto tokens is supported by AI market headlines, and that these tokens can post positive price movements even on days where bitcoin trades lower. Thus, we believe that many AI-related tokens could continue to be traded as a more general proxy to AI progress.

Key Trends in AI

One of the most important trends in the AI sector (relevant to crypto-AI products), in our view, is the continued culture around open sourcing models. More than 530,000 models are publicly available on Hugging Face (a platform for collaboration in the AI community) for researchers and users to operate and fine-tune. Hugging Face’s role in AI collaboration is not dissimilar to relying on GitHub for code hosting or Discord for community management (both of which are used widely in crypto). We don’t think this is likely to change in the near future barring gross mismanagement.

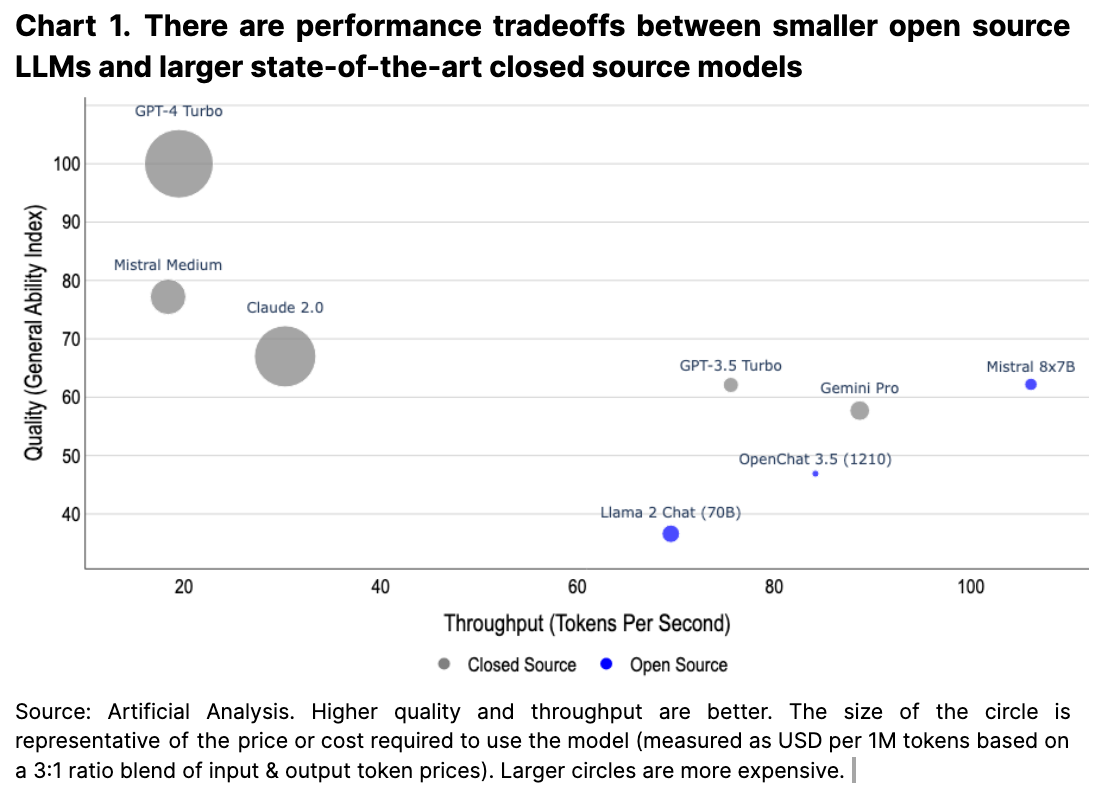

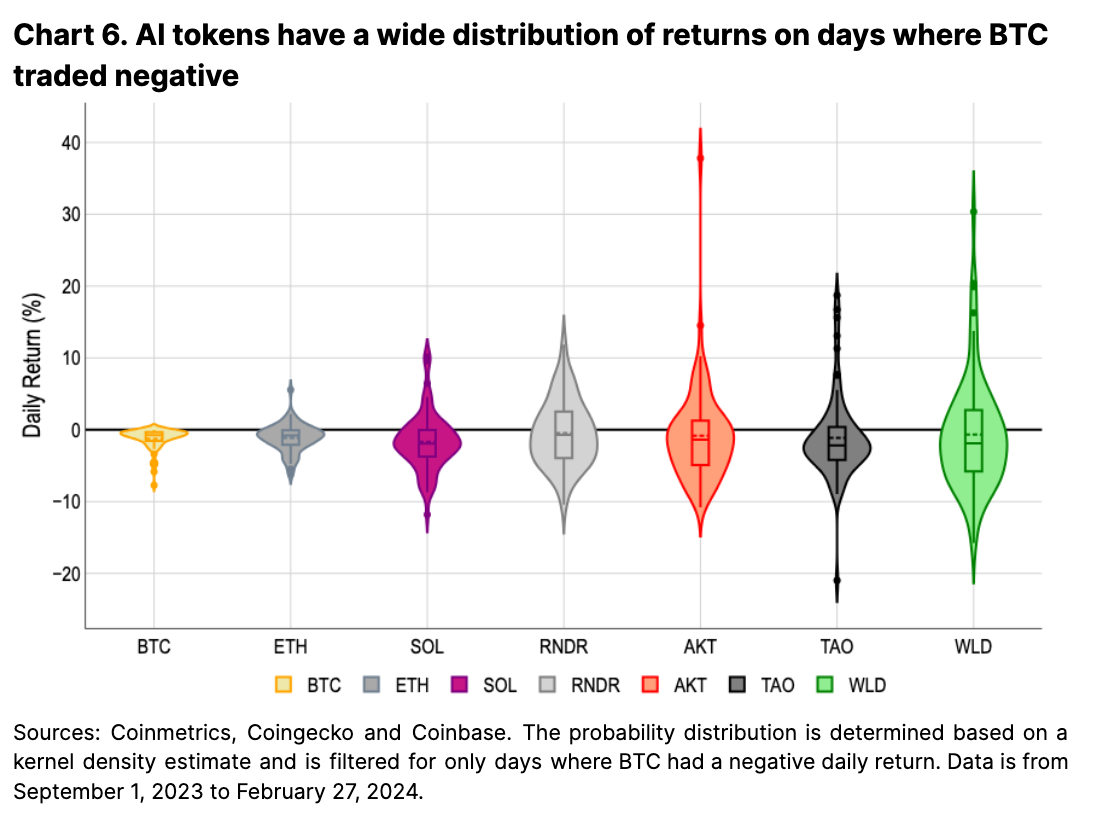

Models available on Hugging Face range from large language models (LLMs) to generative image and video models and include creations from major industry players such as OpenAI, Meta, and Google to those from solo developers. Some of the open source language models even have performance advantages over state-of-the-art closed source models in throughput (whilst retaining comparable output quality), which ensures a certain level of competition between both open source and commercial models (see Chart 1). Importantly, we think this vibrant open source ecosystem, in conjunction with a competitive commercial sector, is already enabling an industry where non-performant models are competed away.

A second trend is the increasing quality and cost-effectiveness of smaller models (highlighted in LLM research as early as 2020 and also more recently in a paper by Microsoft), which also dovetails with the open source culture to further enable a future of performant, locally-run AI models. Some fine-tuned open source models can even outperform leading closed source models under certain benchmarks. In such a world, some AI models can be locally run and thus maximally decentralized. Certainly, incumbent technology companies will continue to train and run larger models on the cloud, but there will be tradeoffs in the design space between the two.

Separately, given the increasingly complex task of AI model benchmarking (which includes data contamination and varying test scopes), we think that generative model outputs may ultimately be best evaluated by end users in a free market. In fact, there are existing tools available for end users to compare model outputs side-by-side as well as benchmarking companies that do the same. The difficulty of generative AI benchmarking is apparent in the constantly growing variety of open LLM benchmarks, which include MMLU, HellaSwag, TriviaQA, BoolQ, and more – each testing different use cases such as common sense reasoning, academic topics, and various question formats.

A third trend we observe in the AI space is the ability for existing platforms with strong user lock-ins or concrete business problems to benefit disproportionately from AI integrations. For example, GitHub Copilot’s integration with code editors augments an already powerful developer environment. Embedding AI interfaces into other tools ranging from mail clients to spreadsheets to customer relationship management software also follows as a natural use case for AI (e.g. Klarna’s AI assistant doing 700 full-time agents’ worth of work).

It’s important to note, however, that in many such scenarios, AI models do not lead to new platforms, but rather only augment existing ones. Other AI models which improve traditional business processes internally (e.g. Meta’s Lattice which restored its ads performance after Apple’s App Tracking Transparency rollout) also often rely on proprietary data and closed systems. These types of AI models are likely to remain closed-source due to their vertical integration into a core product offering and usage of proprietary data.

Within the AI hardware and compute space, we see two additional pertinent trends. First is a transition of computational usage from training to inferencing. That is, when AI models are first developed, a lot of computational resources are used to "train" the models by feeding them large datasets. That has now shifted towards deploying and making queries to the model.

Nvidia’s February 2024 earnings call revealed that approximately 40% of their business is inferencing, and Satya Nadella made similar remarks in the Microsoft earnings call a month prior in January, noting that “most” of their Azure AI usage was for inferencing. As this trend continues, we think that entities seeking to monetize their models will prioritize platforms that can reliably run models in a secure and production-ready manner.

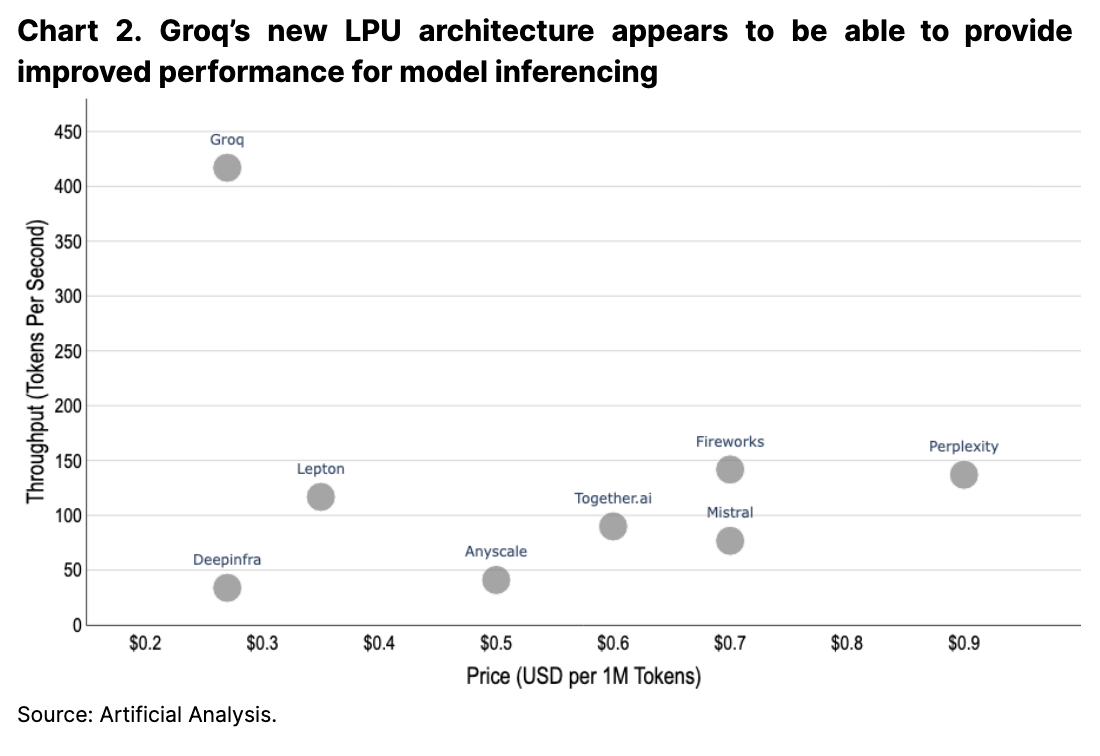

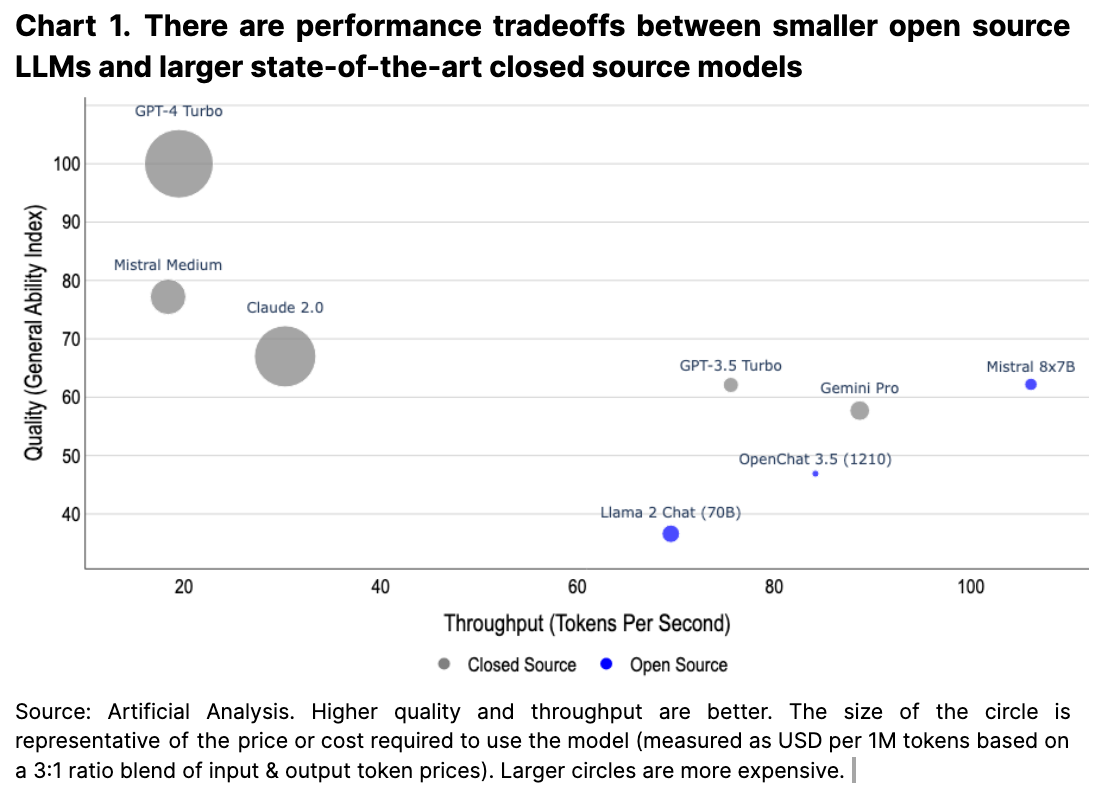

A second major trend we see is a competitive landscape around hardware architecture. Nvidia’s H200 processors will be available starting 2Q24, with the following generation B100 expected to further double performance. Additionally, Google’s continued backing of its own tensor processing units (TPUs) and Groq’s newer language processing units (LPUs) may also increase their market share in the following years as alternatives in the space (see Chart 2). Such developments could shift the cost dynamics in the AI industry, and could benefit cloud providers that can pivot quickly, procure hardware en masse, and set up any associated physical networking requirements and developer tooling.

Overall, the AI sector is a nascent and rapidly growing field. ChatGPT was first released to the market less than 1.5 years ago in November 2022 (even though its underlying GPT-3 model had existed since June 2020), and the rapid progress of the space since has been nothing short of astounding. Although there has been some questionable behavior around the biases behind some generative AI models, we’ve begun to see that poorer performing models are disregarded by the market in favor of better alternatives. The rapid evolution of the sector and possibility of incoming regulations mean that the problem spaces of the industry are regularly changing as new solutions come to market.

The often touted blanket remedy that “decentralization fixes [insert problem]” as a foregone conclusion is, in our view, premature for such a rapidly innovating field. It is also preemptively solving for a centralization problem that may not necessarily exist. The reality is that the AI industry already has a lot of decentralization in both technology and business verticals through competition between many different companies and open source projects. Moreover, truly decentralized protocols at a technological and social level move slower than centralized counterparts by nature of their decision making and consensus process. This may pose impediments to products seeking to balance decentralization and feature competitiveness at this phase of AI development. That said, we do think there are some synergies between crypto and AI that could meaningfully materialize, particularly over longer time horizons.

Scoping the Opportunity

Broadly speaking, we view the intersection of AI and crypto in two general categories. The first comprises use cases where AI products improve the crypto industry. This includes scenarios ranging from creating human-readable transactions and improving blockchain data analytics to utilizing model outputs onchain as part of permissionless protocols. The second category comprises use cases where crypto aims to disrupt traditional AI pipelines via decentralized methods for compute, validation, identity, and more.

The use cases for business-aligned scenarios in the former category are clear in our view, and we believe there is also long-term promise in the more complicated scenarios of inferencing models onchain despite the significant technical challenges that remain. Centralized AI models can improve crypto much as they would any other tech-centric industry, from improving developer tools and code auditing to turning human language into onchain actions. But investments in this area typically accrue to private corporations via venture funding, and as a result, are generally overlooked by public markets.

What’s less certain to us, however, is the value proposition of the second category (i.e. the means by which crypto disrupts existing AI pipelines). Challenges in the latter category supersede technical ones (which we think are generally solvable in the long term) and are an uphill battle against broader market and regulatory forces. That said, much of the recent attention on AI and crypto has been on this category as those use cases lend themselves better to having liquid tokens. This is our focus in the next section as there are comparatively fewer liquid tokens relating to centralized AI tooling in crypto (for now).

Crypto’s Role in the AI Pipeline

At the risk of oversimplification, we view crypto’s potential impact on AI across four broad stages of the AI pipeline: (1) the collection, storage, and engineering of data, (2) the training and inferencing of models, (3) the validation of model outputs, and (4) the tracking of outputs from AI models. There has been a bevy of new crypto-AI projects across these sectors, though we think many will face critical challenges in demand side generation and fierce competition from both centralized companies and open source solutions in the short to medium term.

Proprietary Data

Data is the foundation of all AI models and is perhaps the key differentiator for specialized AI model performance. Historical blockchain data itself is a new rich data source for models, and certain projects like Grass also aim to leverage crypto incentives to curate new datasets from the open internet. In this regard, crypto has the opportunity to provide industry specific datasets as well as to incentivize the creation of new valuable datasets. (Reddit’s recent $60M annual data licensing deal with Google foreshadows a future of increased dataset monetization in our view.)

Many earlier models like GPT-3 used a mixture of open datasets like CommonCrawl, WebText2, books, and Wikipedia, with similar datasets freely available on Hugging Face (currently hosting over 110,000 options). However, many recent closed source models have not been as forthcoming with their final training dataset compositions, likely in a bid to protect commercial interests. We think the trend towards proprietary datasets, particularly in commercial models, will continue and lead to an increase in the importance of data licensing.

Existing centralized data marketplaces are already helping to bridge the gap between data providers and consumers, which we think sandwiches the opportunity space of newer decentralized data marketplace solutions between open source data directories and corporate competitors. Without the backing of legal constructs, a purely decentralized data marketplace additionally needs to build standardized data interfaces and pipelines, verify data integrity and provisioning, and resolve the cold start problem for its offerings – all whilst balancing token incentives across market participants.

Separately, decentralized storage solutions may also eventually find a niche in the AI industry, though we think there still are meaningful challenges on that front as well. On the one hand, pipelines for distributing open source datasets already exist and are widely used. On the other hand, the owners of many proprietary datasets have stringent security and compliance requirements. There currently exist no regulatory pathways to host sensitive data on decentralized storage platforms like Filecoin and Arweave. In fact, many enterprises are still transitioning from on-premise servers to centralized cloud storage providers. At a technical level, the decentralized nature of these networks is also currently incompatible with certain geolocation and physical data silo requirements for sensitive data storage.

While price comparisons have also been made between decentralized storage solutions and mature cloud providers that indicate decentralization is cheaper per storage unit, we think this misses the bigger picture. First, there are upfront costs associated with migrating systems between providers that need to be considered beyond daily operational expenses. Second, crypto-based decentralized storage platforms would need to match the better tooling and integrations of mature cloud systems which have developed over the past two decades. From a business operations perspective, cloud solutions also have more predictable costs, provide contractual obligations and dedicated support teams, and also have a large pool of existing developer talent.

It’s also worth noting that cursory comparisons to the “big three” cloud providers only (Amazon Web Services, Google Cloud Platform, and Microsoft Azure) are incomplete. There are dozens of lower-cost cloud companies also fighting for market share by offering cheaper, bare-bones server racks. In our view, these are the real near-term primary competitors for cost-sensitive consumers. That said, recent innovations like Filecoin’s compute-over-data and Arweave’s ao computing environment could be poised to play a role for upcoming greenfield projects that utilize less sensitive datasets or for the most cost-sensitive (likely smaller) companies that are not yet vendor locked.

Thus, while there is certainly room for novel crypto-enabled products in the data space, we think that the nearest term disruption will occur where they can generate unique value propositions. Areas where decentralized products are competing head-to-head against traditional and open source competitors will take significantly more time to progress in our view.

Training and Inferencing Models

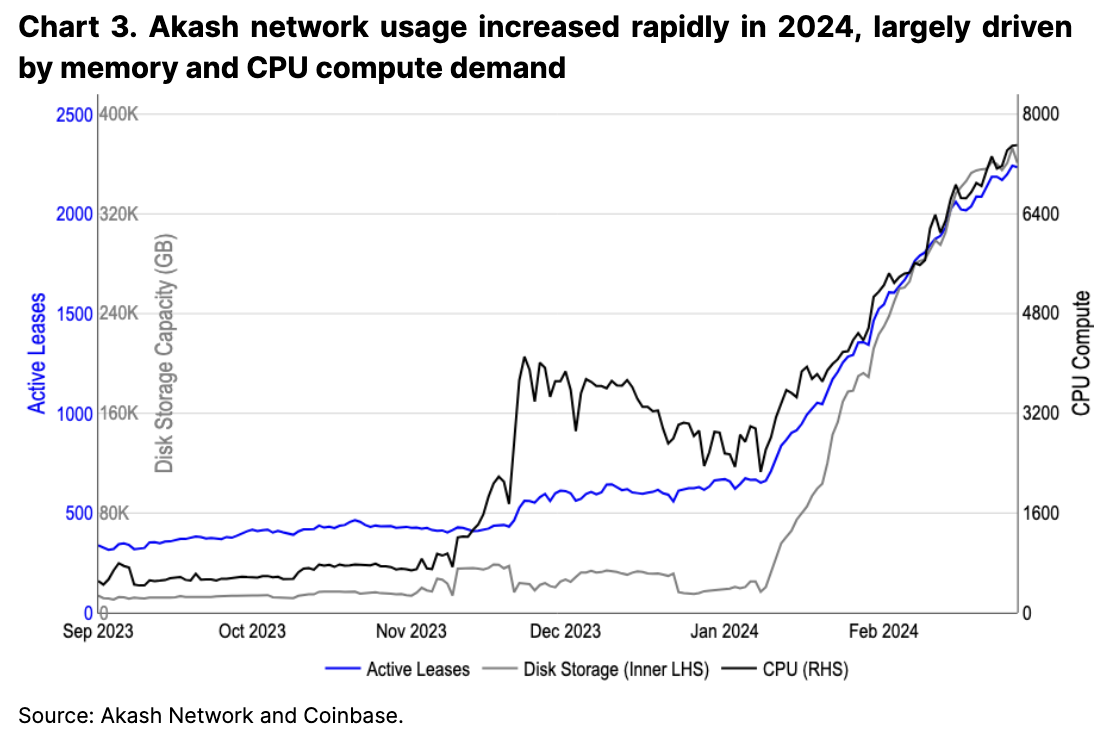

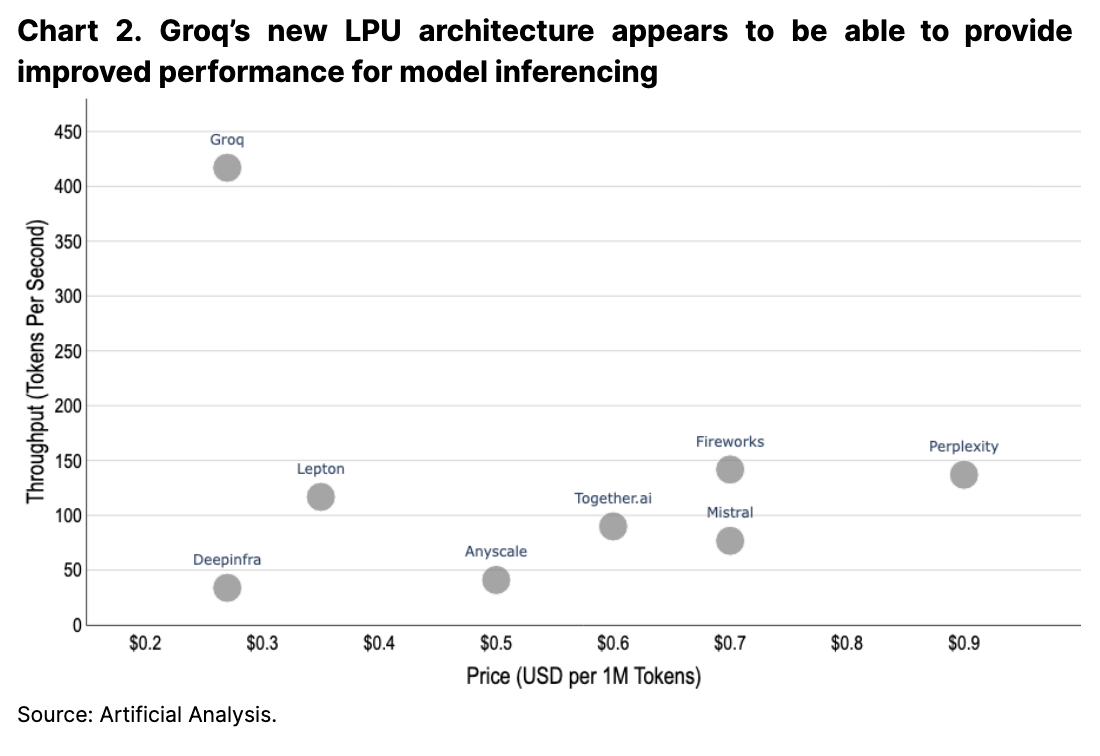

The decentralized compute (DeComp) sector of crypto also aims to be an alternative to centralized cloud computation in part because of the existing GPU supply crunch. One proposed solution to this shortage, embraced by protocols like Akash and Render, is to repurpose idle computing resources in a collective network and at a reduced cost relative to centralized cloud providers. Based on preliminary metrics, such projects appear to be gaining increased usage both on the user and supplier fronts. For example, Akash has seen a threefold increase in active leases (i.e. number of users) year to date (see Chart 3), largely driven by increased usage of their storage and compute resources.

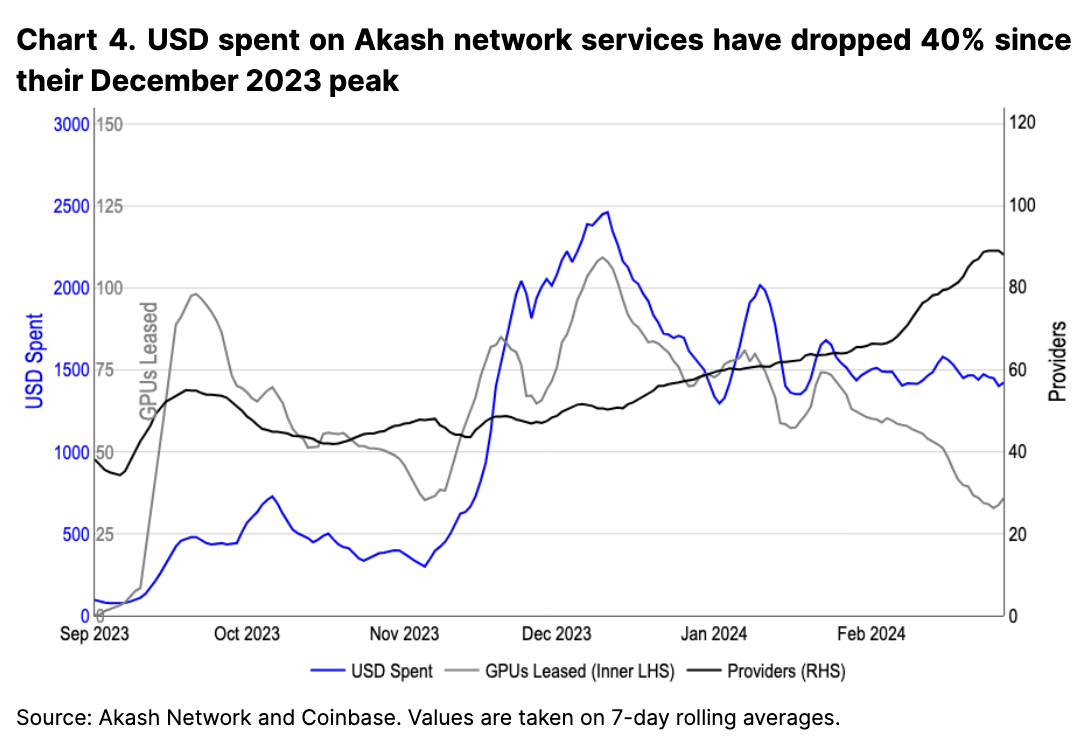

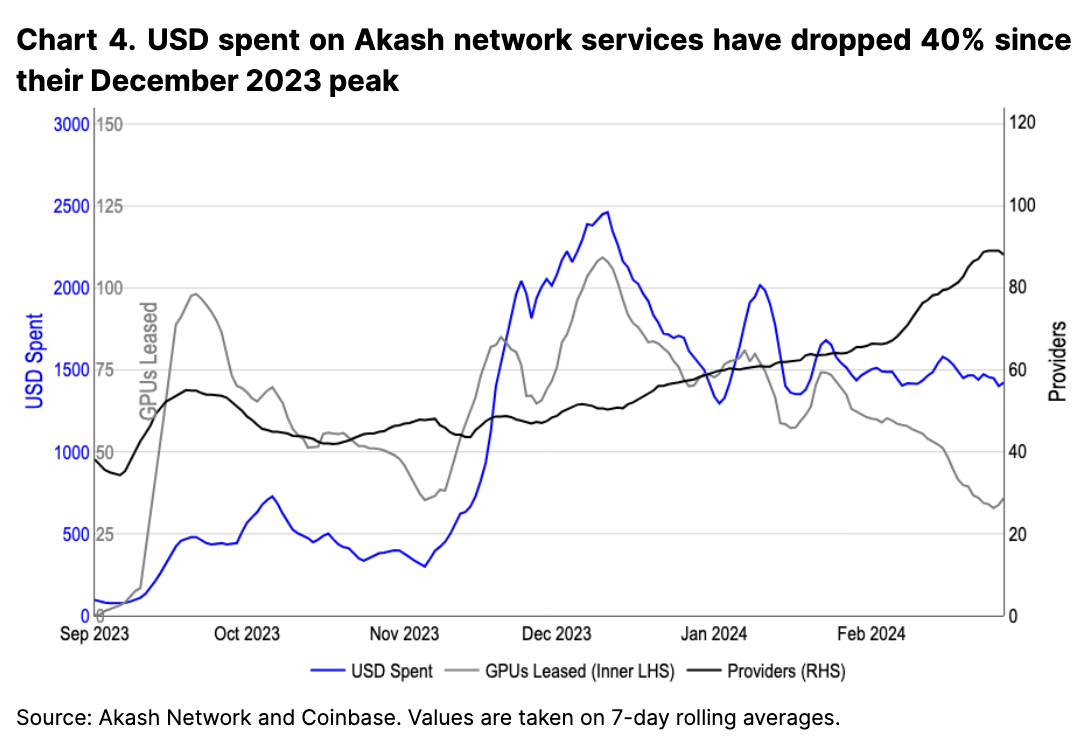

However, fees paid to the network have actually dropped since their December 2023 peak as the supply of GPUs available has outpaced the demand growth for those resources. That is, as more providers have joined the network, the number of GPUs leased (which appear to be the largest revenue drivers proportionally) has dropped (see Chart 4). For networks where compute pricing can change based on supply and demand variations, it is unclear to us where persistent, usage-driven demand to native tokens will ultimately arise if supply-side growth outpaces that on the demand side. We think it’s likely that such tokenomic models may need to be revisited in the future to optimize for changes in the marketplace, though the long term impacts of such changes are currently unknown.

On a technical level, decentralized compute solutions also suffer from the challenge of network bandwidth limitations. For large models requiring multi-node training, the physical network infrastructure layer plays a critical role. Data transfer speeds, synchronization overhead, and support for certain distributed training algorithms mean that specific network configurations and custom networking communications (like InfiniBand) are required to facilitate performant execution. This is difficult to accomplish in a decentralized manner beyond a certain cluster size.
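To give a rough sense of why bandwidth matters, the sketch below estimates a bandwidth-only lower bound on the time to synchronize gradients once across nodes. It assumes a ring all-reduce (one common data-parallel scheme, in which each node sends roughly 2(N-1)/N times the gradient payload) and ignores latency, compression, and overlap with compute. The function name and all input figures are illustrative assumptions, not measurements from any network discussed here.

```python
def ring_allreduce_seconds(
    num_params: float,
    bytes_per_param: int,
    num_nodes: int,
    link_gbit_per_s: float,
) -> float:
    """Bandwidth-only lower bound for one gradient sync via ring all-reduce.

    Each node transmits about 2 * (N - 1) / N times the gradient payload.
    Latency and protocol overhead are deliberately ignored.
    """
    if num_nodes < 2:
        return 0.0  # a single node has nothing to synchronize
    payload_bytes = num_params * bytes_per_param
    bytes_on_wire = 2 * (num_nodes - 1) / num_nodes * payload_bytes
    link_bytes_per_s = link_gbit_per_s * 1e9 / 8  # Gbit/s -> bytes/s
    return bytes_on_wire / link_bytes_per_s


if __name__ == "__main__":
    # Hypothetical 7B-parameter model with 2-byte gradients on 8 nodes.
    for label, gbit in [("1 Gbit/s link", 1.0), ("400 Gbit/s link", 400.0)]:
        secs = ring_allreduce_seconds(7e9, 2, 8, gbit)
        print(f"{label}: ~{secs:.2f} s per synchronization")
```

Under these assumptions the per-step synchronization cost scales inversely with link speed, so a link that is 400 times slower makes each sync 400 times longer before any latency effects are counted.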

On the whole, we think that the long term success of decentralized compute (and storage) faces strong competition from centralized cloud providers. In our view, any adoption would be a multi-year process akin to cloud adoption timelines at the very least. Given the increased technical complexities of decentralized network development combined with absence of a similarly scalable development and sales team, we believe that fully executing on the decentralized computation vision will be an uphill journey.

Validating and Trusting Models

As AI models become more central in our lives, there is growing concern over their output quality and biases. Certain crypto projects aim to find a decentralized, market-based solution to this problem by leveraging a suite of algorithms to evaluate outputs across different categories. However, the aforementioned challenges around model benchmarking, as well as apparent cost, throughput, and quality tradeoffs, make head-to-head comparisons challenging. BitTensor, one of the largest AI-focused cryptocurrencies in this category, aims to address this problem, though there are a number of technical challenges outstanding that could hinder its widespread adoption (see Appendix 1).

Separately, trustless model inferencing (i.e. proving model outputs were, in fact, generated by the model claimed) is another area of active research in the crypto-AI overlap. However, we think such solutions could face challenges on the demand side as open source models shrink in size. The role of trustless inferencing is less clear in a world where models can be downloaded and run locally and with the content integrity verified via the well established file hash/checksum methodology. Admittedly, many LLMs are not yet able to be trained and operated from lightweight devices like a mobile phone, but a beefy desktop computer (like those used for high end gaming) can already be used to run many performant models.
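As a minimal sketch of the file hash/checksum approach mentioned above, the snippet below computes a SHA-256 digest of a downloaded model file and compares it with a digest published by the model's distributor. The function names and file path are hypothetical, and we assume the publisher distributes a SHA-256 hex digest through a channel the user already trusts.

```python
import hashlib
import os
import tempfile


def sha256_of_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, reading it in chunks
    so that multi-gigabyte model weights need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_model_file(path: str, expected_hex: str) -> bool:
    """True if the local file matches the publisher's digest."""
    return sha256_of_file(path) == expected_hex.strip().lower()


if __name__ == "__main__":
    # Stand-in for a downloaded weights file (hypothetical contents).
    with tempfile.NamedTemporaryFile(delete=False) as tmp:
        tmp.write(b"pretend these bytes are model weights")
        path = tmp.name
    published = sha256_of_file(path)  # in practice, copied from the publisher
    print("match:", verify_model_file(path, published))
    os.remove(path)
```

Note that this only confirms the integrity of the weights a user runs locally; it does not prove which model produced an output served by a remote party, which is the problem trustless inferencing targets.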

Data Provenance and Identity

The importance of tracking AI-generated content is also becoming top of mind as the outputs of generative AI become increasingly indistinguishable from those of humans. GPT-4 passes the Turing test at 3 times the rate of GPT-3.5, and it seems almost inevitable that we will reach a day where online personas are indistinguishable between bots and humans. In such a world, determining the humanity of online users as well as watermarking AI generated content will be critical functionalities.

Decentralized identifiers and proof-of-personhood mechanics like Worldcoin purport to solve the former issue of identifying humans onchain. Similarly, publishing data hashes to a blockchain can assist in data provenance by verifying the age and origin of content. However, in a similar vein to earlier sections, we think the viability of crypto-based solutions must be weighed against centralized alternatives.

Some countries, such as China, link online personhood to a government controlled database. Although much of the rest of the world is not nearly as centralized, a coalition of know-your-customer (KYC) providers can also provide proof-of-personhood solutions separate from blockchain technology (perhaps in a similar fashion to trusted certificate authorities who form the bedrock of internet security today). There is also ongoing research on AI watermarks to embed hidden signals in both text and image outputs to allow algorithms to detect whether content has been AI generated. Many leading AI companies including Microsoft, Anthropic, and Amazon have publicly committed to adding such watermarks to their generated content.

Additionally, many existing content providers are already trusted to keep rigorous records of content metadata for compliance reasons. As a result, users often trust the metadata associated with a social media posting (though not its screenshot) even though it is centrally stored. An important note here is that any crypto-based solution to data provenance and identity would additionally require integration with user platforms to be broadly effective. Thus, while crypto-based solutions to prove identity and data provenance are technically feasible, we also think that their adoption is not a given and will ultimately come down to business, compliance, and regulatory requirements.

Trading the AI Narrative

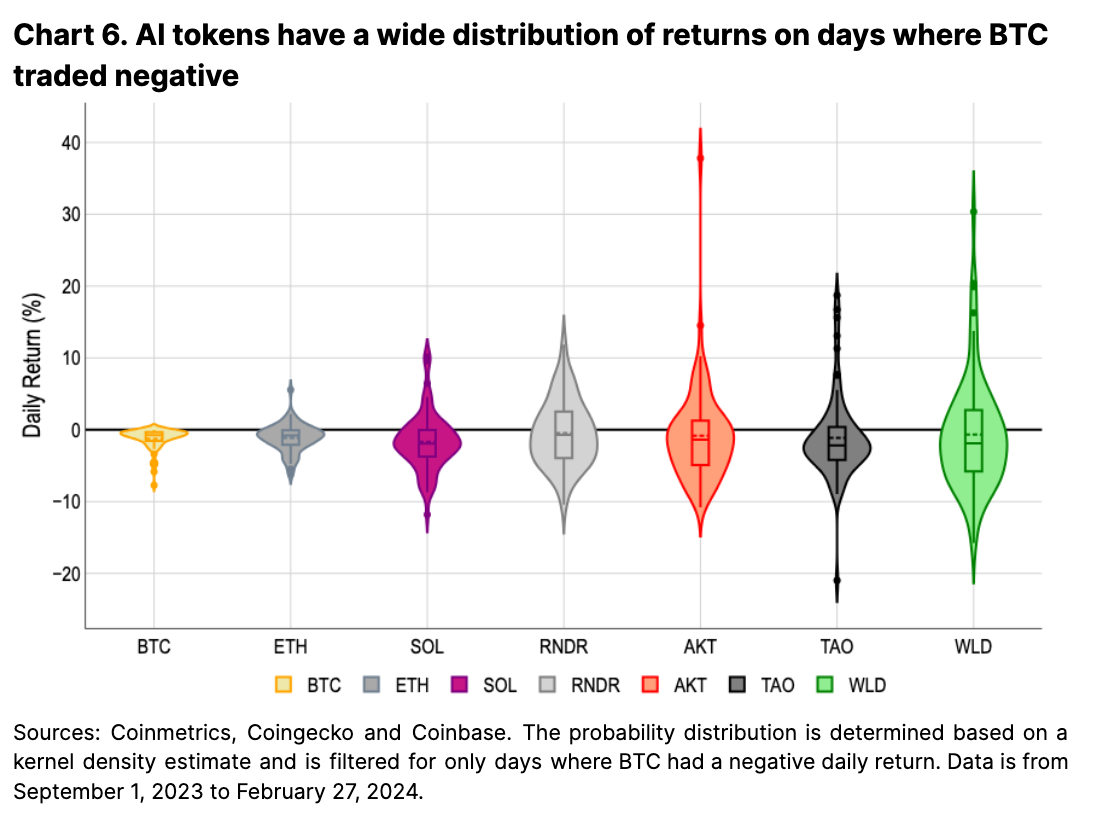

Despite the aforementioned overheads, many AI tokens have outperformed both bitcoin and ether and also major AI equities like Nvidia and Microsoft starting in 4Q23. We think this is because AI tokens generally benefit from strong associated performance in both the broader crypto market as well as related AI news headlines (see Appendix 2). As a result, AI-focused tokens can experience upward price fluctuations even when bitcoin prices fall, which results in upside volatility during bitcoin drawdown periods. Chart 5 visualizes the dispersion of AI tokens on days where Bitcoin traded downwards.
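One way such a dispersion could be computed is sketched below: take daily returns for bitcoin and for a token, keep only the days on which bitcoin fell, and summarize the token's returns on those days. This is an illustrative sketch rather than the method behind Chart 5; the function names are our own and the price series in the demo are made-up placeholders.

```python
from statistics import mean


def daily_returns(prices):
    """Simple close-to-close returns from a list of daily prices."""
    return [curr / prev - 1.0 for prev, curr in zip(prices, prices[1:])]


def returns_on_btc_down_days(btc_prices, token_prices):
    """Token returns restricted to days where bitcoin's return was negative."""
    btc_r = daily_returns(btc_prices)
    tok_r = daily_returns(token_prices)
    if len(btc_r) != len(tok_r):
        raise ValueError("price series must cover the same days")
    return [t for b, t in zip(btc_r, tok_r) if b < 0]


def summarize_dispersion(returns):
    """Basic dispersion statistics; None if there were no down days."""
    if not returns:
        return None
    return {
        "days": len(returns),
        "mean": mean(returns),
        "min": min(returns),
        "max": max(returns),
        "share_positive": sum(r > 0 for r in returns) / len(returns),
    }


if __name__ == "__main__":
    # Placeholder prices for illustration only, not market data.
    btc = [100.0, 90.0, 99.0, 89.1]
    token = [10.0, 11.0, 11.0, 9.9]
    print(summarize_dispersion(returns_on_btc_down_days(btc, token)))
```

A `share_positive` well above zero on bitcoin down days would be consistent with the upside volatility described above.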

As a whole, however, we still think many near term persistent demand drivers are missing in the AI narrative trade. A lack of clear adoption forecasting and metrics has enabled a wide range of memetic speculation which may not be long-term sustainable in our view. Eventually, price and utility will converge – the open question is how long it will take and whether utility will rise to meet price or vice versa. That said, we do think that a continued constructive crypto market and outperforming AI sector could sustain a strong crypto-AI narrative for some time.

Conclusion

Crypto’s role in AI does not exist in a vacuum – any decentralized platform is competing against existing centralized alternatives and must be analyzed in the context of broader business and regulatory requirements. Thus, supplanting centralized providers purely for the sake of “decentralization” is insufficient, in our view, to drive meaningful adoption. Generative AI models have existed for a handful of years, and already retain a certain level of decentralization due to market competition and open source software.

A recurring theme throughout this report has been to acknowledge that crypto-based solutions, while often technically feasible, still require an enormous amount of work in order to reach feature parity with more centralized platforms – and said platforms are not going to remain stagnant in the interim. In fact, centralized development is often faster than decentralized development due to consensus mechanisms, which can pose challenges in a fast evolving field like AI.

In light of this, we think the AI and crypto overlap is still in its nascent stages and is likely to change rapidly in the coming years as the broader AI sector develops. A decentralized AI future, as it is currently envisioned by many in the crypto industry, is not guaranteed – in fact, the future of the AI industry itself is still largely undetermined. As a result, we think it prudent to navigate such a market carefully and more deeply examine how crypto-based solutions can truly provide a meaningfully better alternative, or at the very least, understand the underlying trading narrative.

Appendix 1: BitTensor

BitTensor incentivizes different intelligence markets across its 32 subnets. This aims to solve some of the problems with benchmarking by enabling subnet owners to create game-like constraints to extract intelligence from information providers. For example, its flagship Subnet 1 centers around text prompting and incentivizes miners who “produce the best prompt completions in response to the prompts sent by the subnet validators in that subnet.” That is, it rewards miners who can generate the best text responses to given prompts, as judged by other validators in that subnet. This has enabled an intelligence economy of network participants trying to create models across various markets.

However, such validation and reward mechanisms are still in their early phases and open to adversarial attacks, particularly if models are evaluated with yet other models that contain biases (though progress has been made on that front with fresh synthetic data used for evaluation on certain subnets). This is particularly true for “fuzzy” outputs like language and art where evaluation metrics can be subjective – hence the rise of multiple benchmarks for model performance.

For example, BitTensor’s Subnet 1’s validation mechanism, in practice, requires:

the validator [to] produce one or more reference answers which all miner responses are compared to. Those which are most similar to the reference answer will attain the highest rewards and ultimately gain the most incentive.

The current similarity algorithm uses a combination of string literal and semantic matching as the basis for rewards, but accounting for varying stylistic preferences is difficult to capture with a limited set of reference answers.
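To make the limitation concrete, here is a toy scoring function that blends a string-literal score with a crude semantic proxy and rewards the closest match to any reference answer. It is not BitTensor's actual algorithm: the function names, the equal weighting, and the bag-of-words cosine (standing in for whatever semantic model a subnet may use) are all assumptions made for illustration.

```python
import math
import re
from collections import Counter
from difflib import SequenceMatcher


def literal_similarity(a: str, b: str) -> float:
    """Character-level similarity in [0, 1] from difflib."""
    return SequenceMatcher(None, a, b).ratio()


def _bag_of_words(text: str) -> Counter:
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def semantic_proxy_similarity(a: str, b: str) -> float:
    """Cosine similarity of word counts; a crude stand-in for embeddings."""
    va, vb = _bag_of_words(a), _bag_of_words(b)
    dot = sum(va[t] * vb[t] for t in va.keys() & vb.keys())
    norm = math.sqrt(sum(c * c for c in va.values())) * math.sqrt(
        sum(c * c for c in vb.values())
    )
    return dot / norm if norm else 0.0


def reward(response: str, references: list, literal_weight: float = 0.5) -> float:
    """Best blended similarity between a response and any reference answer."""
    if not references:
        return 0.0
    return max(
        literal_weight * literal_similarity(response, ref)
        + (1.0 - literal_weight) * semantic_proxy_similarity(response, ref)
        for ref in references
    )


if __name__ == "__main__":
    refs = ["The capital of France is Paris."]
    for answer in [refs[0], "Paris is the capital of France.", "Bananas are yellow."]:
        print(f"{reward(answer, refs):.3f}  {answer}")
```

In this toy setup, a correct answer phrased differently from the reference scores below a verbatim copy, which illustrates how a limited reference set can penalize stylistic variation.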

It’s also unclear if models arising from BitTensor’s incentive structure will ultimately be able to outperform centralized models (or whether the top performing models would move to BitTensor), or how it would be able to accommodate other tradeoff factors such as size and underlying computational costs. A freely accessible market where users can choose to use models that suit their preferences may be able to achieve similar resource allocations via the “invisible hand”. That said, BitTensor does attempt to solve an extremely challenging problem in an expanding problem space.

Appendix 2: Worldcoin

Perhaps the clearest example of AI tokens tracking AI market headlines is the case of Worldcoin. Its World ID 2.0 upgrade, released on December 13, 2023, went mostly unnoticed, yet the token made a 50% move upwards following Sam Altman’s promotion of Worldcoin on December 15. (Conjectures around Worldcoin’s future remain rampant partially because Sam Altman is a cofounder of Tools for Humanity, the developer behind Worldcoin.) Similarly, OpenAI’s Sora release on February 15, 2024 led to a nearly threefold gain in price despite no related announcements on Worldcoin’s Twitter or blog (see Chart 6). As of publishing, the fully diluted valuation of Worldcoin sits at $80B – similar to OpenAI’s $86B February 16 valuation (a company generating $2B of annualized revenue).