Introduction

The implications of generative artificial intelligence (AI) on how people live and work span the range from transformative to controversial. At its best, this technology promises to boost productivity and potentially lower the costs of goods and services through task automation. At its worst, it may breed disinformation and fraud, potentially adding to the costs of litigation, monitoring, auditing and other forms of operational complexity.

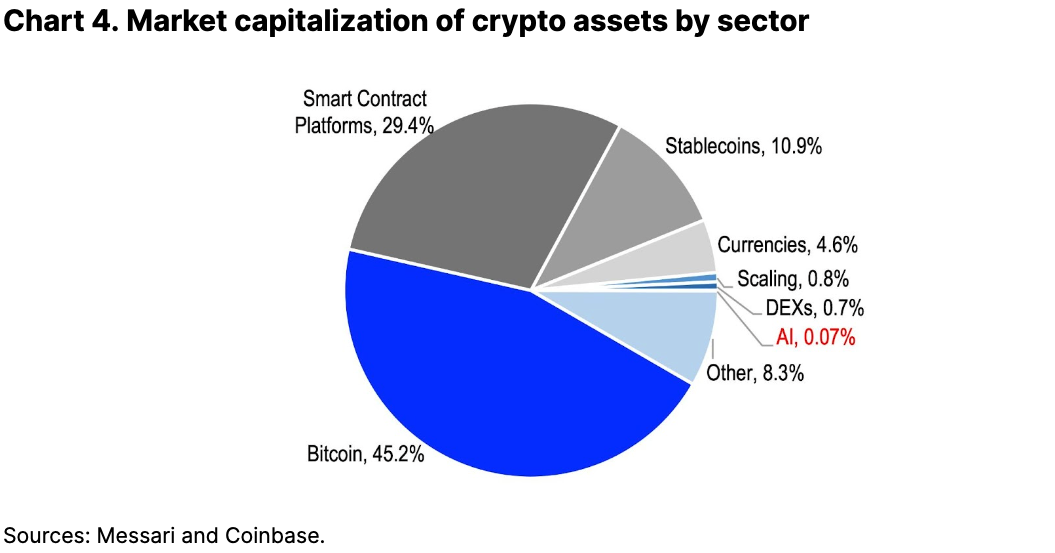

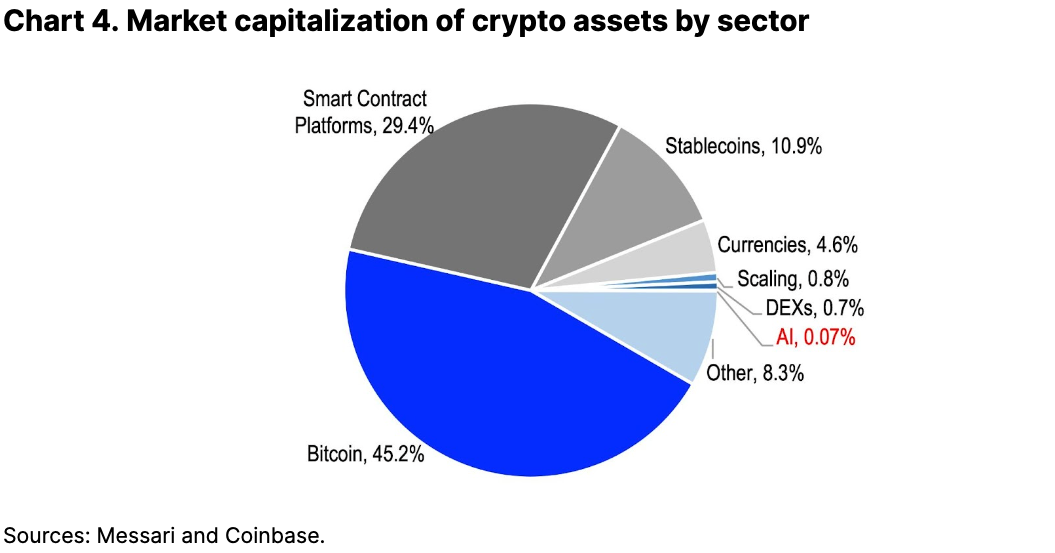

The landscape for how AI and crypto currently interact (or could interact in the future) is vast. The market capitalization of crypto projects directly involved in AI today totals US$772M, representing only 0.07% of the total crypto market cap, according to Messari. But that figure rises to around US$2.85B (as of end-May) if we include projects that have incorporated or are incorporating AI into their capabilities and/or service offerings in some form. Currently, some of these AI-related crypto projects attempt to use decentralized solutions to address issues of data accessibility or computational resourcing to support AI progress.
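As a rough cross-check, and assuming both figures are measured against the same total market cap (the source dates only the second one), the 0.07% share implies a total crypto market cap of roughly US$1.1T. On that basis, the broader US$2.85B group would amount to about a quarter of one percent of the market:

```latex
\text{Total crypto market cap} \approx \frac{\text{US\$}772\text{M}}{0.0007} \approx \text{US\$}1.10\text{T},
\qquad
\frac{\text{US\$}2.85\text{B}}{\text{US\$}1.10\text{T}} \approx 0.26\%
```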

Blockchain technology could also help address problems such as verifying the authenticity of online content, by capturing the lifecycle of content creation in order to distinguish photos, music, videos and text generated by AI from media created by humans. Taking this a step further, blockchains could be used to create an auditable track record of the decision-making processes used by AI algorithms themselves, which otherwise tend to be opaque and cannot be easily scrutinized.
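To make the idea of an "auditable track record" more concrete, here is a minimal sketch of the core data structure, assuming a simple hash chain in which each logged AI decision commits to the hash of the one before it. The `DecisionLog` class and the record fields are hypothetical illustrations, not any real project's API. A real blockchain would also replicate and timestamp these entries across many independent nodes; this version keeps everything in memory.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


@dataclass
class DecisionLog:
    """Hypothetical append-only log: each entry commits to the previous entry's hash."""
    entries: list = field(default_factory=list)

    @staticmethod
    def _digest(prev_hash: str, record: dict) -> str:
        # Canonical JSON (sorted keys) so identical records always hash identically.
        payload = json.dumps({"prev": prev_hash, "record": record}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def append(self, record: dict) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        entry_hash = self._digest(prev_hash, record)
        self.entries.append({"prev": prev_hash, "record": record, "hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        # Recompute every link; editing any past record breaks the chain from that point on.
        prev_hash = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev_hash:
                return False
            if entry["hash"] != self._digest(prev_hash, entry["record"]):
                return False
            prev_hash = entry["hash"]
        return True


log = DecisionLog()
log.append({"model": "example-model-v1", "input_id": "q-001", "output": "approve"})
log.append({"model": "example-model-v1", "input_id": "q-002", "output": "reject"})
print(log.verify())  # True
log.entries[0]["record"]["output"] = "reject"  # quietly rewrite history
print(log.verify())  # False
```

The hash chain only makes tampering detectable. It says nothing about *why* a model produced a given output, so it would complement, not replace, efforts to make model logic itself interpretable.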

Problem solving or problem to solve?

To oversimplify, generative AI refers to the ability to train algorithms on existing content in order to create new content. The capabilities of these systems have accelerated recently, particularly with the advent of ChatGPT, whose large language model can produce automated content that convincingly approximates human speech. That said, these complex models tend to be resource-intensive and time-consuming to train and run. This can create lags in output generation or limit AI access to only those places in the world that can meet such demands.

We believe there are some common issues in generative AI that could be addressed by blockchain technology and crypto protocols. These include:

- Demand for data resources. Generative AI depends on large, generalized (rather than specialized) high-quality databases to tackle a broad array of queries. In fact, the amount of data available to train these models may be one of the biggest limits to their continued improvement. Decentralized data marketplaces could give these models access to verified, diverse and reliable datasets through agents that communicate and transact with one another. A system of token incentives and penalties could help ensure that the data meets the quality standards these models require.

- Demand for computational resources. Training generative AI models can be computationally expensive, and we believe that demands for more resources are likely to increase in the future. Decentralized networks of computing power can reward users who volunteer their idle GPUs to help relieve some of this computational burden. This can reduce costs and is already being done for other computationally intensive work like video rendering for video games and/or movies.

- Misinformation and disinformation. Data authenticity and disinformation are major issues in the digital age, particularly as AI can create “deepfakes” that are increasingly difficult to distinguish from their source material (or human-generated content). Blockchain and non-fungible token (NFT) technology can help combat this by establishing the provenance of images, video, music and other media.

- Auditability. The decision-making processes of generative AI algorithms are generally opaque, which makes them very difficult to audit and challenging to refine. Integrating blockchain technology into AI models can help make those processes more transparent and allow users to scrutinize the logic and reliability of AI-produced outcomes.

- Democratizing AI development and use. We believe cryptocurrency platforms can make AI systems more accessible, allowing people around the world both to contribute to their development and to use them. The current generative AI market is dominated by a few large organizations, like OpenAI (the developer of ChatGPT and DALL-E) or Stability AI (the developer of Stable Diffusion). The barriers to entry for new developers may narrow the design of these systems from an economic, cultural and/or geographic perspective. Crypto platforms can help source a more diverse set of contributions, as well as broaden access so more users can take advantage of AI’s benefits (e.g. healthcare advice or e-commerce).
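To illustrate how "token incentives and penalties" might enforce data quality in a decentralized marketplace, here is a toy stake-and-slash ledger. Everything in it is a hypothetical assumption: the `DataMarketplace` name, the stake size, the reward and the slash fraction. It also leaves out the hard part, namely how validators decide whether a dataset passes a quality check. The same pattern could, in principle, reward GPU providers for verified compute work.

```python
from dataclasses import dataclass, field


@dataclass
class DataMarketplace:
    """Toy stake-and-slash ledger for data contributions (all parameters assumed)."""
    min_stake: int = 100          # tokens locked per submission (hypothetical)
    reward: int = 10              # tokens paid when a dataset passes validation (hypothetical)
    slash_fraction: float = 0.5   # share of the stake burned on failure (hypothetical)
    balances: dict = field(default_factory=dict)
    locked: dict = field(default_factory=dict)

    def submit(self, contributor: str, dataset_id: str) -> None:
        # Lock the contributor's stake against this specific dataset.
        if self.balances.get(contributor, 0) < self.min_stake:
            raise ValueError("insufficient balance to stake")
        self.balances[contributor] -= self.min_stake
        self.locked[dataset_id] = (contributor, self.min_stake)

    def settle(self, dataset_id: str, passed_quality_check: bool) -> None:
        # Return the stake plus a reward on success; return only part of it on failure.
        contributor, stake = self.locked.pop(dataset_id)
        if passed_quality_check:
            self.balances[contributor] += stake + self.reward
        else:
            self.balances[contributor] += int(stake * (1 - self.slash_fraction))


market = DataMarketplace(balances={"alice": 200, "bob": 200})
market.submit("alice", "ds-1")
market.submit("bob", "ds-2")
market.settle("ds-1", passed_quality_check=True)
market.settle("ds-2", passed_quality_check=False)
print(market.balances)  # {'alice': 210, 'bob': 150}
```

The design choice worth noting is that the penalty is larger than the reward: under these assumed numbers, submitting low-quality data loses 50 tokens while good data earns only 10, so contributors have a reason to check their data before staking on it.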

Of these five issues, the first two are challenges that may impede or slow AI development, while the latter three are risks that AI technology poses, or may pose, as it progresses. We discuss these risks (particularly the third, misinformation and disinformation) in more detail below.

The disinformation age

The technology to create deepfakes has become more widespread, and AI can increasingly fabricate manipulated images or videos that are difficult to distinguish from the original media. In fact, recent controversy has centered on photorealistic AI-generated images of scenes that never took place.

To address the problem, academic researchers and technology companies are studying and developing better methods for deepfake detection. Their “endogenous” solutions attempt to identify subtle anomalies in digital images, video and other media that signal computational manipulation. In other words, this approach trains AI on large datasets to detect inconsistencies in content … generated by AI. As the AI on each side of this contest learns from the other’s errors and improves, the cycle could advance beyond humans’ ability to assess fidelity independently.

While detection technology may improve in the future, current models risk reporting inaccurate results because the process is heuristic by nature. Alternatively, we believe blockchain technology has the potential to support these authentication efforts at the “exogenous” level.

For example, non-fungible tokens (NFTs) can be used to track the lifecycle of content creation and verify the legitimacy of that content. When an NFT is minted, it receives a unique identifier recorded on a blockchain, which verifies the source of a particular asset and thus its authenticity. Moreover, the blockchain creates a transparent, immutable record of every transaction involving that NFT, a process that can be applied more broadly to multiple forms of online content.
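A minimal sketch of the provenance idea follows, assuming the unique identifier is derived from a cryptographic hash (SHA-256) of the content itself and that ownership history is append-only. The `ProvenanceRegistry` class is a hypothetical in-memory stand-in, not a real NFT contract or token standard. Note one limitation: a hash matches only the exact bytes, so even re-compressing an authentic photo produces a different identifier, and a sketch like this can confirm only exact copies.

```python
import hashlib
from datetime import datetime, timezone
from typing import Dict, Optional


class ProvenanceRegistry:
    """Hypothetical in-memory stand-in for an on-chain content registry."""

    def __init__(self) -> None:
        self._records: Dict[str, dict] = {}

    @staticmethod
    def fingerprint(content: bytes) -> str:
        # The SHA-256 digest of the raw bytes serves as this file's unique identifier.
        return hashlib.sha256(content).hexdigest()

    def mint(self, content: bytes, creator: str) -> str:
        token_id = self.fingerprint(content)
        if token_id in self._records:
            raise ValueError("content already registered")
        self._records[token_id] = {
            "creator": creator,
            "minted_at": datetime.now(timezone.utc).isoformat(),
            "owners": [creator],  # ordered ownership history, like on-chain transfer records
        }
        return token_id

    def transfer(self, token_id: str, new_owner: str) -> None:
        # Append-only: earlier owners are never removed from the history.
        self._records[token_id]["owners"].append(new_owner)

    def lookup(self, content: bytes) -> Optional[dict]:
        # Returns a record only if these exact bytes were minted; any edit changes the hash.
        return self._records.get(self.fingerprint(content))


registry = ProvenanceRegistry()
photo = b"original photo bytes (placeholder)"
token = registry.mint(photo, creator="newsroom-photographer")
registry.transfer(token, "wire-service")
print(registry.lookup(photo)["owners"])     # ['newsroom-photographer', 'wire-service']
print(registry.lookup(photo + b" edited"))  # None
```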

The idea of using this technology to fight misinformation is not new:

- The Research & Development team at The New York Times launched the Media Provenance project in 2020-21 to explore how blockchain solutions could help combat disinformation by tracking assets inside trusted information networks.

- The DeepTrust Alliance, a non-profit organization established to develop and promote standards for AI accountability, cited the potential of blockchains to track content creators based on their reputations for accuracy, akin to a form of decentralized identity. [3]

That said, we do not believe that applying blockchain technology in this way is a replacement for improving media literacy and raising awareness of data integrity risks when consuming media. While blockchains can prove the source and legitimacy of online content, they do not necessarily make a given source more trustworthy.

AI, Worldcoin and Orbs

But the leaps being made in automated content creation are heralding a faster pace of disinformation creep. Researchers at Georgetown University, OpenAI and the Stanford Internet Observatory suggest that recent significant improvements in generative AI have made it easier for malicious actors to propagate disinformation at greater scale and lower cost.

Generative AI, for example, can help online bots mimic human behavior more convincingly and efficiently. Already these bots can cause real economic harm, for example by:

- distorting statistics on web traffic,

- costing companies billions of dollars in lost advertising spend,

- carrying out distributed denial of service (DDoS), spam and phishing attacks, and

- manipulating prices in cryptocurrency or financial markets.

A novel approach to countering this problem was introduced in late 2021 by a startup called Worldcoin (Tools for Humanity), co-founded by Sam Altman (the CEO of OpenAI) and backed by Andreessen Horowitz and Coinbase Ventures. The company attracted attention for proposing to offer free digital tokens to users who agree to authenticate their identity via World ID, effectively establishing “proof of personhood.” As of publication, no token has been launched, apart from a private token sale to backers in March last year. (Note: in early 2023, reports circulated that Worldcoin was aiming for a full launch in 1H23. It has since supported its ecosystem with the release of the World App wallet in early May and secured an additional $115M in Series C funding.)

Proof of personhood would be verified via custom hardware called the Orb, a biometric imaging device that scans the iris and is ostensibly more secure than fingerprints or facial recognition. If successful, this would represent one path towards pseudonymous digital identity and let users know whether they are interacting online with other human beings or with generative AI impersonating a human being. The idea is that this would eventually become a platform on which developers can build unique applications or test economic concepts (like equitably distributing a universal basic income) within a closed system.
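The core requirement behind proof of personhood is uniqueness: one person, one identity. The sketch below shows a generic way such a check could work, assuming each iris scan yields a fixed-length bit string ("template") and that two scans of the same eye differ in only a small fraction of bits. This is not Worldcoin's actual algorithm, which the text does not describe. The `PersonhoodRegistry` class, the 2048-bit template length and the 0.32 match threshold are all illustrative assumptions, and real iris matching also has to handle eye rotation and occluded regions.

```python
import random
from typing import List


def hamming_fraction(a: int, b: int, n_bits: int) -> float:
    # Fraction of bit positions in which the two templates differ.
    return bin(a ^ b).count("1") / n_bits


class PersonhoodRegistry:
    """Hypothetical one-person-one-ID check using fuzzy template matching."""

    def __init__(self, n_bits: int = 2048, threshold: float = 0.32) -> None:
        self.n_bits = n_bits
        self.threshold = threshold  # assumed cutoff below which two templates "match"
        self.templates: List[int] = []

    def register(self, template: int) -> bool:
        # Reject enrollment if the template is close to any enrolled one,
        # i.e. the same person is probably trying to register twice.
        for existing in self.templates:
            if hamming_fraction(template, existing, self.n_bits) < self.threshold:
                return False
        self.templates.append(template)
        return True


rng = random.Random(0)
alice = rng.getrandbits(2048)
rescan = alice
for pos in rng.sample(range(2048), 100):  # flip 100 bits (~5%) to mimic sensor noise
    rescan ^= 1 << pos
newcomer = rng.getrandbits(2048)

registry = PersonhoodRegistry()
print(registry.register(alice))     # True
print(registry.register(rescan))    # False: distance 100/2048, about 0.05
print(registry.register(newcomer))  # True: unrelated templates differ in about half their bits
```

An exact hash would not work here because no two scans of the same eye are bit-for-bit identical. That is why the sketch compares distances rather than looking up a fingerprint, unlike the content-provenance case.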

Conclusions

We’re still in the early stages of seeing how AI and blockchain technology could interact. The market cap of crypto projects directly developing AI models on blockchain, applying AI to decentralized applications or solving AI-related issues is still fairly low, at only 0.07% of the total across all tokens. Although we expect this sector of the digital asset market to grow, that growth is more likely to materialize over the longer term.

Indeed, while the media portrays AI development as a “frantic arms race in the tech industry” (FT), global AI private investment actually fell 27% between 2021 and 2022 (Stanford Institute for Human-Centered AI). In fact, US and global venture funding of AI companies declined sharply in 1Q23, by a further 27% and 43% QoQ respectively, according to CB Insights. That said, the moderation in VC investing is not unique to the AI sector, and overall, there is still a record amount of dry powder that ultimately needs to find a home. We think the intersection of AI and crypto represents an important opportunity for entrepreneurs looking to build in web3.